Abstract

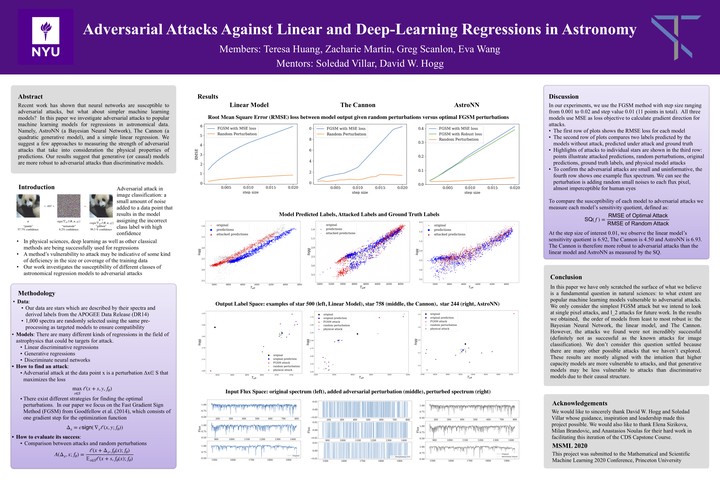

Recent work has shown that neural networks are susceptible to adversarial attacks, but what about simpler machine learning models? In this paper, we investigate adversarial attacks on popular machine learning models for regression on astronomical data: AstroNN (a Bayesian neural network), The Cannon (a quadratic generative model), and a simple linear regression. We suggest a few approaches to measuring the strength of an adversarial attack that take into consideration the physical properties of the predictions. Our results suggest that generative (or causal) models are more robust to adversarial attacks than discriminative models.

Type