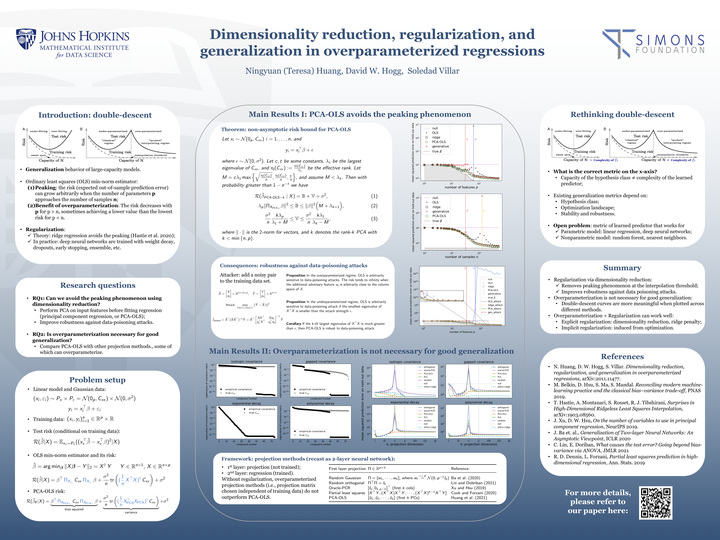

Dimensionality reduction, regularization, and generalization in overparameterized regressions

Abstract

Overparameterization is powerful: very large models fit the training data perfectly and yet often generalize well. The risk curve of these models typically shows a double-descent phenomenon, characterized by a peak at the interpolation threshold followed by decreasing risk in the overparameterized regime. The double-descent behavior raises the following research questions: Can we avoid the peaking phenomenon? Is overparameterization necessary for good generalization? In this work, we proved that the peaking disappears with dimensionality reduction by providing non-asymptotic risk bounds. We compared a wide range of projection-based regression models and found that overparameterization may not be necessary for good generalization.
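The peaking behavior described in the abstract can be illustrated with a small simulation. The sketch below is not the paper's experimental setup: it assumes isotropic Gaussian features, a fixed unit-norm coefficient vector, and principal component regression as one stand-in for a projection-based model. The names `fit_min_norm`, `fit_projected`, and `simulate`, along with all parameter values, are hypothetical choices made for illustration.

```python
import numpy as np


def fit_min_norm(X, y):
    """Minimum-norm least squares; interpolates the training data when p >= n."""
    return np.linalg.pinv(X) @ y


def fit_projected(X, y, k):
    """Regress on the top-k principal directions of X (principal component regression)."""
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    V_k = Vt[:k].T                      # (p, k) projection matrix
    gamma, *_ = np.linalg.lstsq(X @ V_k, y, rcond=None)
    return V_k @ gamma                  # map back to the p original coordinates


def simulate(n=40, D=100, k=10, sigma=0.5, trials=20, seed=0,
             ps=(10, 20, 40, 60, 100)):
    """Average excess risk of both estimators when only the first p features are used."""
    rng = np.random.default_rng(seed)
    beta = np.ones(D) / np.sqrt(D)      # assumed true coefficients, unit norm
    risks = {"min_norm": {p: 0.0 for p in ps},
             "projected": {p: 0.0 for p in ps}}
    for _ in range(trials):
        X = rng.standard_normal((n, D))
        y = X @ beta + sigma * rng.standard_normal(n)
        for p in ps:
            Xp = X[:, :p]
            fits = {"min_norm": fit_min_norm(Xp, y),
                    "projected": fit_projected(Xp, y, min(k, p))}
            for name, coef in fits.items():
                full = np.zeros(D)
                full[:p] = coef         # unused features get coefficient zero
                # For isotropic features, excess risk equals ||beta_hat - beta||^2.
                risks[name][p] += np.sum((full - beta) ** 2) / trials
    return risks


if __name__ == "__main__":
    result = simulate()
    for p in sorted(result["min_norm"]):
        print(p, result["min_norm"][p], result["projected"][p])
```

Under these assumptions, the minimum-norm fit should show its largest risk near the interpolation threshold (p = n = 40 here) and lower risk again at p = 100, while the projected fit, which never uses more than k = 10 directions, should stay comparatively flat across p.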

Publication

SIAM Journal on Mathematics of Data Science